We just shipped KickoffLabs Smart A/B Testing! Our early calculations show it could nearly double the number of conversions captured for customers running A/B tests. Want to learn how? Read on…

Smarter A/B Testing is now the default!

Some background about A/B testing

We were very reluctant to add A/B testing to KickoffLabs. It’s a pretty recent addition for us.

We firmly believe in the principles behind A/B testing and run experiments at KickoffLabs all the time. However, we’ve always had three major concerns that we don’t believe any solution has really addressed.

- Customers tend to focus on the testing and ignore how important it is to simply generate traffic and write compelling copy. A 5% conversion rate improvement is great unless it’s only impacting 100 unique views in a month. Then it’s only 5 more leads.

- To see statistically significant results you need to generate quite a bit of traffic. It’s easy to call a test too early, before it has run long enough to show whether you have really made a difference or have just seen a bump because the variation is new and not actually better.

- Testing radical changes comes with the risk of losing conversions. If you have a great landing page that converts at 35%, and you start testing one that converts at 10%… you lose a LOT of potential conversions just to find out you came up with a terrible variation.
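
To put a number on that last concern, here is a quick back-of-the-envelope sketch. The 35% and 10% rates come from the example above; the 1,000 visitors and the even 50/50 split are assumptions made up for illustration, not figures from KickoffLabs.

```python
def expected_conversions(visitors, rate_a, rate_b, share_b):
    """Expected conversions when `share_b` of the traffic goes to variation B."""
    to_b = visitors * share_b
    to_a = visitors - to_b
    return to_a * rate_a + to_b * rate_b


visitors = 1000  # hypothetical traffic during the test

# Everyone sees the original page (35%).
baseline = expected_conversions(visitors, 0.35, 0.10, share_b=0.0)

# Classic A/B test: half the traffic sees the 10% variation.
even_split = expected_conversions(visitors, 0.35, 0.10, share_b=0.5)

lost = baseline - even_split
print(round(baseline), round(even_split), round(lost))
```

Under those assumptions the even split gives up about 125 of the 350 conversions the original page would have captured on its own.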

When we finally released A/B testing at KickoffLabs we intentionally kept it simple. Our goal was not to compete head to head with dedicated A/B platforms like VWO and Optimizely. We simply wanted to see how our customers would use the tests and how we could improve their results over time.

Turns out that our customers love the feature, but a lot of them still run into the three issues listed above. People see favorable results and call tests early, before those results are statistically significant, and they also lose a lot of conversions to bad variations.

How could we stop the madness?

Fast forward six months. Josh and I were both at MicroConf 2015 when the always excellent Joanna Wiebe of CopyHackers was dropping some impressive copy knowledge. In passing she mentioned multi-armed bandit experiments.

Here is how the Google Analytics help describes them:

> The name “multi-armed bandit” describes a hypothetical experiment where you face several slot machines (“one-armed bandits”) with potentially different expected payouts. You want to find the slot machine with the best payout rate, but you also want to maximize your winnings. The fundamental tension is between “exploiting” arms that have performed well in the past and “exploring” new or seemingly inferior arms in case they might perform even better. There are highly developed mathematical models for managing the bandit problem, which we use in Google Analytics content experiments.
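
To make the explore/exploit idea concrete, here is a minimal sketch of one common bandit strategy, Thompson sampling. This is an illustration only: which algorithm KickoffLabs actually runs isn't stated here, and the class name, variation names, visitor count and conversion rates are all hypothetical.

```python
import random


class ThompsonSampler:
    """Toy bandit: keeps a Beta belief about each variation's conversion rate."""

    def __init__(self, variations):
        # [conversions + 1, misses + 1] per variation, i.e. a uniform Beta(1, 1) prior.
        self.stats = {name: [1, 1] for name in variations}

    def choose(self, rng=random):
        # Draw a plausible conversion rate for each variation and show the best draw.
        # Uncertain variations still win sometimes (explore); proven ones win often (exploit).
        draws = {name: rng.betavariate(a, b) for name, (a, b) in self.stats.items()}
        return max(draws, key=draws.get)

    def record(self, name, converted):
        # Update the belief with the outcome of one page view.
        self.stats[name][0 if converted else 1] += 1


def simulate(true_rates, visitors, seed=0):
    """Send simulated visitors through the sampler; the true rates are made up."""
    rng = random.Random(seed)
    sampler = ThompsonSampler(true_rates)
    views = {name: 0 for name in true_rates}
    conversions = 0
    for _ in range(visitors):
        name = sampler.choose(rng)
        converted = rng.random() < true_rates[name]
        sampler.record(name, converted)
        views[name] += 1
        conversions += converted
    return views, conversions


views, conversions = simulate({"original": 0.35, "radical": 0.10}, visitors=1000)
print(views, conversions)
```

In a run like this the sampler should shift most of the traffic to the stronger page once the evidence builds up, instead of holding a fixed 50/50 split for the whole test.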

We (ok, I) quickly read the research and knew this was exactly what we needed to help customers make the most of A/B testing with limited traffic.

So we built it!

What makes this a smarter A/B testing solution?

Every lead is vitally important to your business. You work very hard to get those potential leads to your landing pages. A/B testing does an excellent job of helping you optimize your conversion process. However, an unfortunate consequence of this is that some of your potential leads are lost in the validation process.

Using a multi-armed bandit algorithm helps minimize this waste. Our early calculations showed that it could nearly double the actual number of conversions for our customers running A/B tests.

You can now have your cake and eat it too.

What do I have to do to use it?

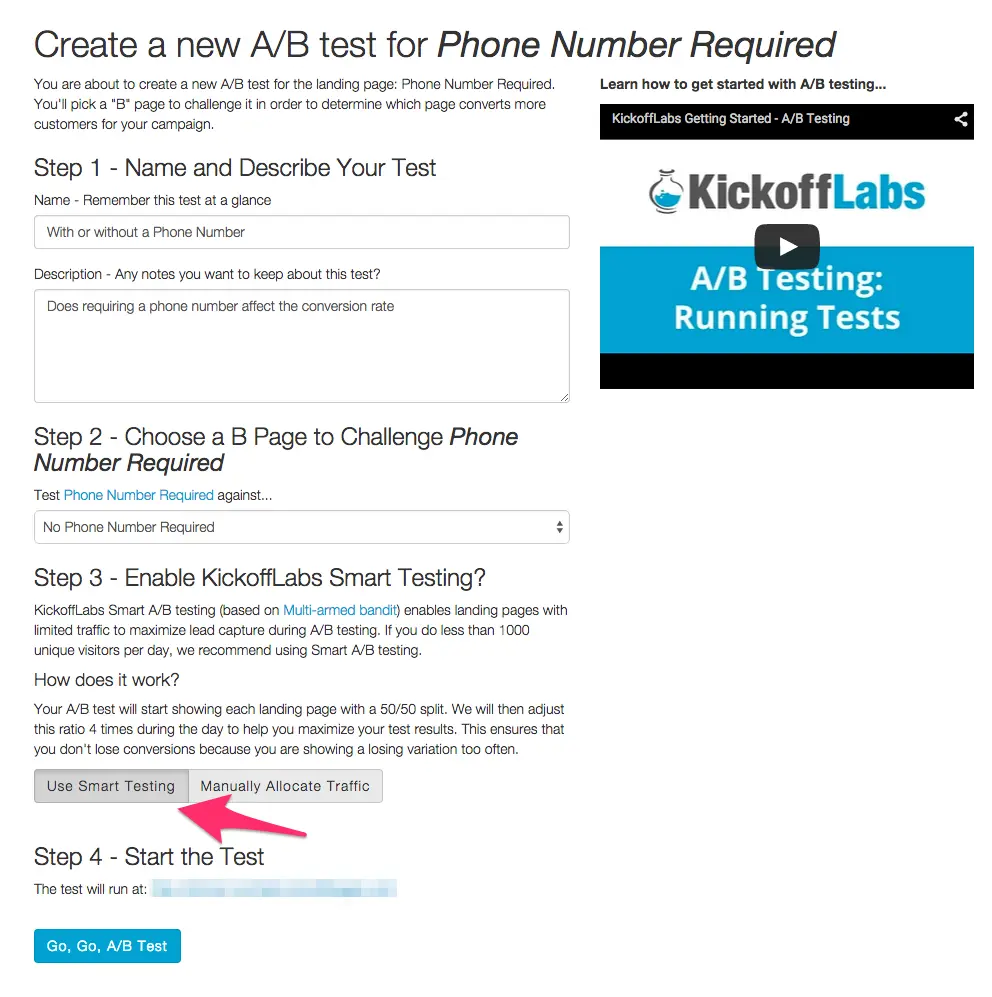

Nothing. Simply create a new A/B test and keep all the defaults.

I fear SkyNet and want to control my own A/B weighting

No problem. Just click the ‘use manual weighting’ checkbox and you are back in the driver’s seat.